Generative Pre-trained Transformer 3 (GPT-3) was unveiled by OpenAI in 2020, and there has been a lot of hype around it since. In this post, I will discuss what it is and how it might impact the future of AI, especially from a developer's perspective.

What is GPT-3?

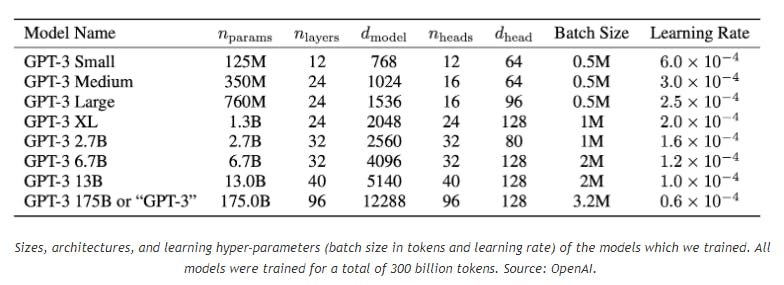

GPT-3 is a large, state-of-the-art language model with 175 billion parameters, a huge number compared to its predecessor GPT-2, which "only" had 1.5 billion. Unlike GPT-2, GPT-3 is not open source: OpenAI offers access to it through an API instead.

A parameter is a learned value, such as a weight, inside a neural network. Each weight controls how much importance the network gives to a particular aspect of its input, so making it larger or smaller changes the network's overall output.
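To make "parameter" a bit more concrete, here is a minimal sketch of a single artificial neuron, assuming the textbook form of a weighted sum of inputs plus a bias. The function names, input values, and layer sizes are hypothetical and chosen only for illustration; GPT-3's 175 billion parameters are values of this kind spread across many much larger layers.

```python
def neuron(inputs, weights, bias):
    # Each weight scales how much one input feature contributes to the output;
    # the weights and the bias are the neuron's parameters.
    return sum(w * x for w, x in zip(weights, inputs)) + bias


def dense_layer_param_count(n_inputs, n_outputs):
    # A fully connected layer has one weight per input-output connection
    # plus one bias per output.
    return n_inputs * n_outputs + n_outputs


# Hypothetical values: 0.5*1.0 + (-1.0)*2.0 + 2.0*3.0 + 0.5 = 5.0
print(neuron([1.0, 2.0, 3.0], [0.5, -1.0, 2.0], 0.5))  # 5.0
# A hypothetical layer with 4 inputs and 3 outputs: 4*3 + 3 = 15 parameters
print(dense_layer_param_count(4, 3))  # 15
```

Changing any single weight changes the output, which is what "giving an aspect more or less importance" means in practice.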

GPT-3 was first introduced in a research paper in May 2020, and OpenAI opened it to beta testers through its API shortly afterwards. Before GPT-3, the largest language model was Microsoft's Turing NLG, which had 17 billion parameters.

Because of the sheer amount of data it was trained on, it can generate text that is usually both grammatically correct and contextually appropriate.

What does it offer?

GPT-3 is capable of translating between languages, doing 3-digit arithmetic, answering questions, generating programming code from a description, and several other tasks that require reasoning or domain expertise. As the OpenAI researchers stated in their paper: “We find that GPT-3 can generate samples of news articles which human evaluators have difficulty distinguishing from articles written by humans.”

Here are some simple examples:

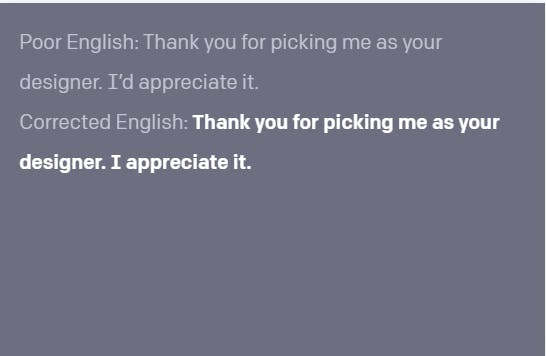

GPT-3 correcting a grammatically incorrect English sentence (the corrected output is shown in bold)

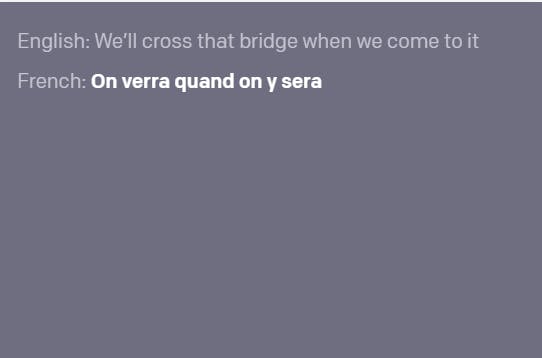

Translating English to French

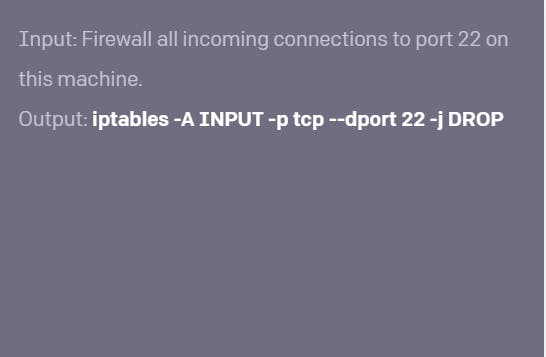

Generating a Bash command from a text description
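Examples like these are driven entirely by the text prompt: GPT-3 is shown a short task description and a few demonstrations, then asked to continue the pattern (what the GPT-3 paper calls few-shot learning). The sketch below builds such a prompt as a plain string. The `=>` separator and the translation pairs follow the illustration in the GPT-3 paper, while the function name `build_few_shot_prompt` is hypothetical; sending the prompt to the model is not shown.

```python
def build_few_shot_prompt(instruction, examples, query):
    # The first line describes the task in plain English.
    lines = [instruction]
    # Each demonstration pairs an input with the output we want.
    for source, target in examples:
        lines.append(f"{source} => {target}")
    # The last line leaves the output blank for the model to complete.
    lines.append(f"{query} =>")
    return "\n".join(lines)


prompt = build_few_shot_prompt(
    "Translate English to French:",
    [("sea otter", "loutre de mer"), ("peppermint", "menthe poivrée")],
    "cheese",
)
print(prompt)
# Translate English to French:
# sea otter => loutre de mer
# peppermint => menthe poivrée
# cheese =>
```

Swapping the instruction and demonstrations (for example, pairs of descriptions and Bash commands) repurposes the same model for a different task without any retraining.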

Conclusion

Apart from being a huge technical achievement in the field of AI, GPT-3 has one more interesting feature: it is available as an API.
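As a rough illustration of what "available as an API" means for a developer, here is a minimal sketch that prepares an HTTP request to OpenAI's legacy Completions endpoint using only Python's standard library. The endpoint URL, the JSON fields, and the model name `davinci-002` are assumptions based on OpenAI's public API and may have changed, so check the current API reference before relying on them; the helper `build_completion_request` is hypothetical, and the request is only built here, not sent.

```python
import json
import os
import urllib.request

# Assumption: OpenAI's legacy Completions endpoint; verify against current docs.
API_URL = "https://api.openai.com/v1/completions"


def build_completion_request(prompt, model, api_key, max_tokens=64):
    # JSON body with the prompt and basic generation settings.
    body = {
        "model": model,
        "prompt": prompt,
        "max_tokens": max_tokens,
        "temperature": 0,
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


req = build_completion_request(
    "Translate English to French: cheese =>",
    model="davinci-002",  # assumption: a GPT-3-family model name
    api_key=os.environ.get("OPENAI_API_KEY", "sk-placeholder"),
)
# Sending is deliberately left out; urllib.request.urlopen(req) would
# perform the call and return the model's JSON response.
```

The point of the design is that all the heavy lifting happens on OpenAI's servers: the client only sends text and receives text back.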

Running a neural network like this is not easy, because it needs expensive infrastructure to keep it running, and using it at scale is a different challenge altogether.
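A quick back-of-the-envelope calculation shows why the infrastructure is expensive. The sketch below estimates the memory needed just to store 175 billion parameters, assuming 2 bytes per parameter (16-bit floats) or 4 bytes (32-bit floats), and divides by a hypothetical 80 GB accelerator. It ignores activations and other runtime memory, so the real requirement would be higher.

```python
import math

GPT3_PARAMS = 175_000_000_000  # 175 billion parameters


def weight_memory_gb(n_params, bytes_per_param):
    # Memory for the weights alone, in gigabytes (10**9 bytes).
    return n_params * bytes_per_param / 1e9


def min_devices(total_gb, device_gb):
    # Lower bound on the number of devices needed just to hold the weights.
    return math.ceil(total_gb / device_gb)


fp16_gb = weight_memory_gb(GPT3_PARAMS, 2)
fp32_gb = weight_memory_gb(GPT3_PARAMS, 4)
print(fp16_gb)  # 350.0
print(fp32_gb)  # 700.0
# Assumption: 80 GB of memory per accelerator.
print(min_devices(fp16_gb, 80))  # 5
```

Even in 16-bit precision, the weights alone do not fit on a single accelerator of this size, before serving a single request.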

As for the talk of GPT-3 replacing developers' jobs, in my view that is not going to happen anytime soon: it is ultimately a trained model, and it lacks creativity and problem-solving ability.

Software engineering, by contrast, is a vast field that covers an entire process, from designing algorithms and writing code to integrating software with hardware and IT infrastructure, and so far no machine can do all of that with the accuracy required.

Overall, GPT-3 is a huge step forward in AI, especially for natural language generation, and it will help multiple industries going forward. In my opinion, its biggest application will be in chatbots: using its API, we can enhance the conversational experience and take it to a whole new level.